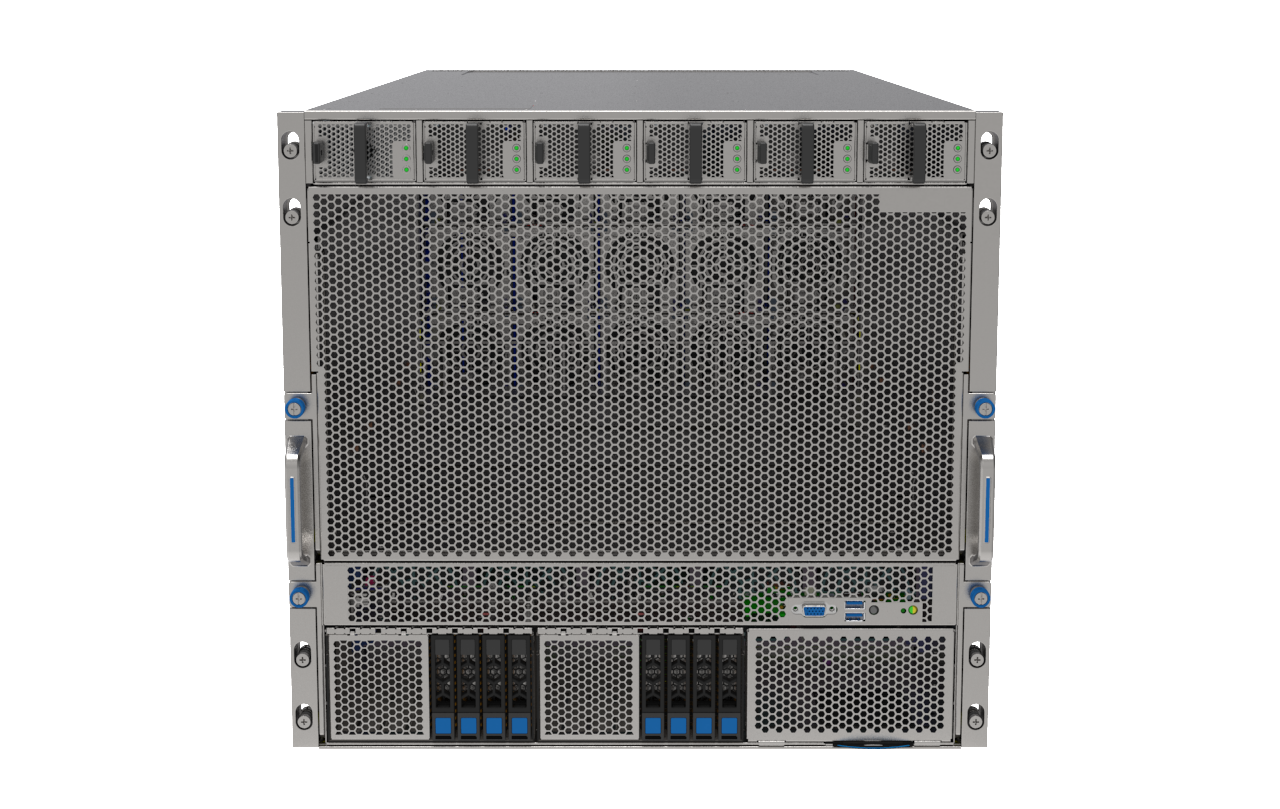

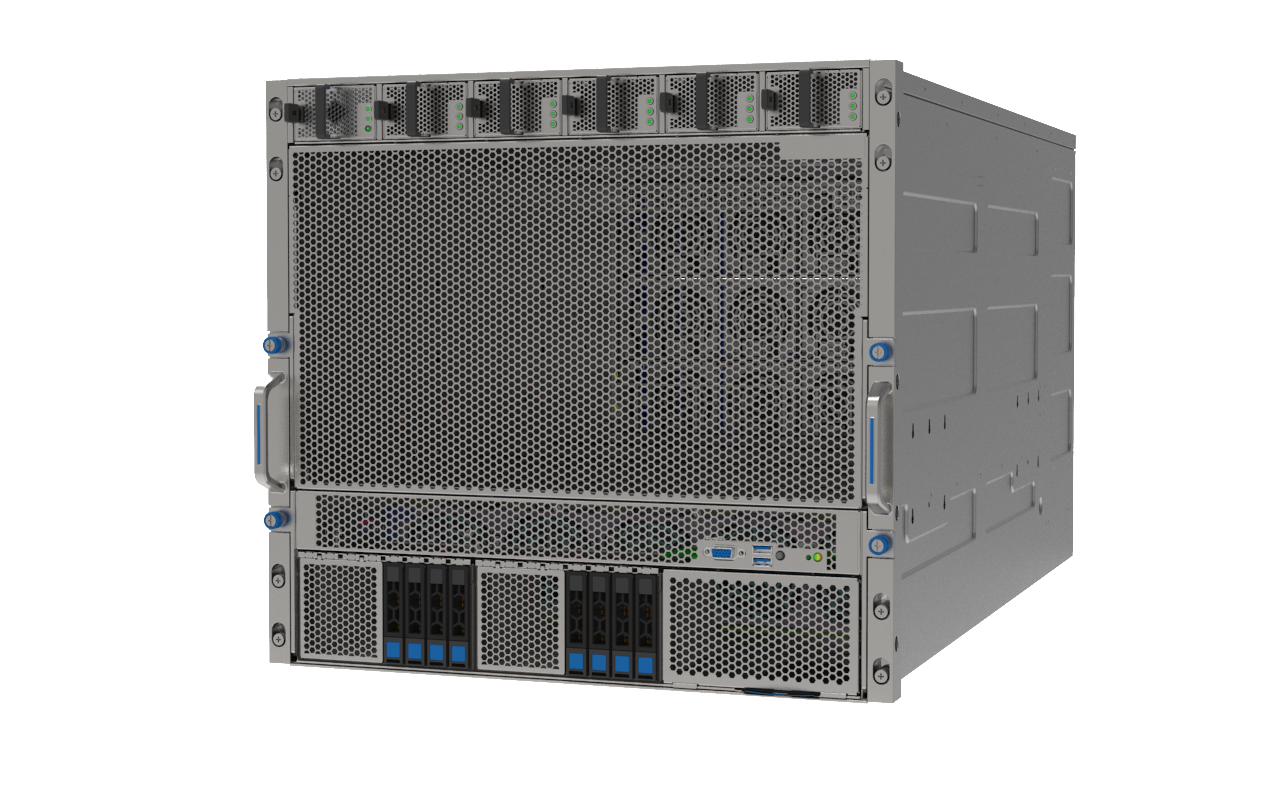

P9000G6 (AC)

- Support dual 4th and 5th Gen Intel® Xeon® Scalable processors

- Support NVIDIA HGX™ B200 system with an air cooling solution

- High-bandwidth GPU-to-GPU communication with NVIDIA NVLink™ Bridge

- Support DDR5 DIMMs: 4800/5600 MT/s @ 1DPC (4th/5th Gen Xeon, respectively), 4400 MT/s @ 2DPC

- Support up to 12 U.2 NVMe SSDs

- Inventec-designed switchboard provides maximum bandwidth for NVIDIA GPUDirect® RDMA

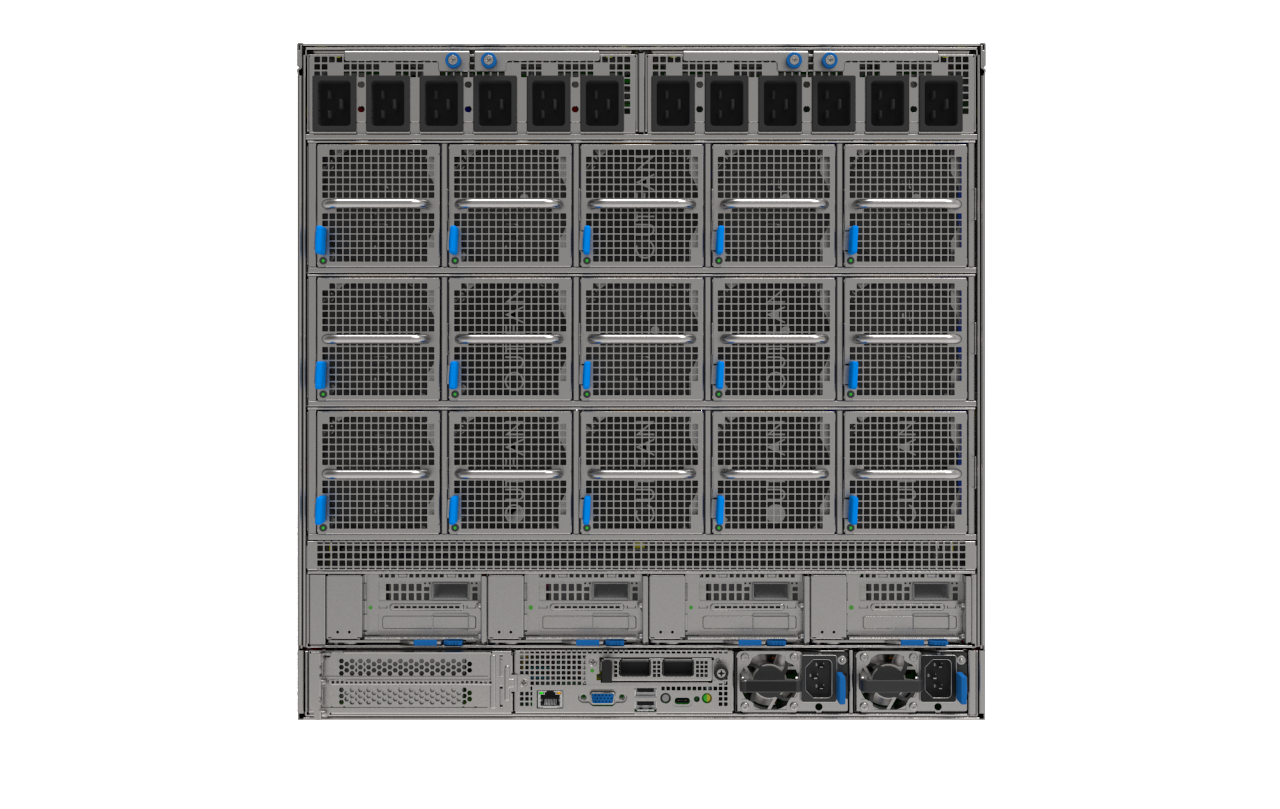

- Increase power efficiency and reliability by decoupling the 12V and 54V power sources

- Modular architecture gives customers a flexible, high-performance platform
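The efficiency case for feeding GPU power at 54V rather than 12V can be sanity-checked with basic arithmetic: for a fixed power, current scales as I = P / V, and resistive conduction loss scales as I²R. The minimal sketch below assumes a hypothetical 1,000 W load and identical conductor resistance at both voltages; it illustrates the physics only and is not a measurement of the P9000G6 power path.

```python
# Illustrative arithmetic only: the 1,000 W load is a hypothetical figure,
# not a P9000G6 specification, and conductor resistance is assumed equal.


def bus_current_a(power_w: float, voltage_v: float) -> float:
    """Current in amperes needed to deliver power_w at voltage_v (I = P / V)."""
    return power_w / voltage_v


def relative_conduction_loss(v_low: float, v_high: float) -> float:
    """I^2 * R loss at v_high as a fraction of the loss at v_low, for the same
    delivered power and the same resistance: (v_low / v_high) ** 2."""
    return (v_low / v_high) ** 2


if __name__ == "__main__":
    load_w = 1000.0  # hypothetical load
    for volts in (12.0, 54.0):
        print(f"{volts:4.0f} V rail: {bus_current_a(load_w, volts):5.1f} A")
    ratio = relative_conduction_loss(12.0, 54.0)
    print(f"54 V conduction loss relative to 12 V: {ratio:.3f}")
```

For the assumed 1,000 W load this gives about 83.3 A at 12V versus about 18.5 A at 54V, so conduction loss in the same conductors falls to roughly 4.9% of the 12V figure.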

With the exponential growth of AI applications and the rising demands of HPC workloads, modern infrastructure must deliver breakthrough performance, scalability, and energy efficiency. The Inventec P9000G6 (AC) meets this need with a design purpose-built for next-generation AI training, scientific computing, and data-driven workloads. By pairing the NVIDIA HGX™ B200 platform with 4th and 5th Gen Intel® Xeon® Scalable processors, it gives enterprises the headroom to scale as their AI and HPC requirements grow.

An Extremely Flexible AI Server Ready for the Next Wave of AI and HPC

The P9000G6 (AC) is built to meet the evolving demands of AI and high-performance computing with a flexible and powerful 10U design. By combining dual Intel® Xeon® Scalable processors with the NVIDIA HGX™ B200 8-GPU system, it delivers the compute capacity needed for today’s most intensive AI training and inference workloads. The platform supports up to 12 U.2 NVMe SSDs, strategically placed under the same PCIe switch as the GPUs so that large datasets stay physically close to the accelerators and can be transferred directly into GPU memory with NVIDIA GPUDirect® Storage (GDS). A carefully engineered air cooling solution ensures reliable thermal performance, even under sustained heavy GPU loads.
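To illustrate the storage-locality idea, the sketch below models a hypothetical PCIe layout in which four switches each host two GPUs and three of the 12 NVMe drives. The switch count, device names, and mapping are assumptions for illustration, not details from this datasheet. The helper finds the drives a given GPU can read from through its own switch, the kind of peer-to-peer path that GDS benefits from.

```python
# Hypothetical PCIe topology for illustration. The switch count and the
# GPU/NVMe-to-switch mapping are assumptions, not P9000G6 specifications.


def build_topology(num_switches: int = 4, gpus_per_switch: int = 2,
                   nvme_per_switch: int = 3) -> dict[str, list[str]]:
    """Return {switch_name: [devices attached below that switch]}."""
    topology: dict[str, list[str]] = {}
    for s in range(num_switches):
        gpus = [f"gpu{s * gpus_per_switch + i}" for i in range(gpus_per_switch)]
        drives = [f"nvme{s * nvme_per_switch + i}" for i in range(nvme_per_switch)]
        topology[f"switch{s}"] = gpus + drives
    return topology


def local_nvme(topology: dict[str, list[str]], gpu: str) -> list[str]:
    """NVMe drives sharing a PCIe switch with `gpu`; transfers from these can be
    routed peer-to-peer through the switch instead of via the CPU."""
    for devices in topology.values():
        if gpu in devices:
            return [d for d in devices if d.startswith("nvme")]
    raise KeyError(f"unknown GPU: {gpu}")


if __name__ == "__main__":
    topo = build_topology()
    for gpu_id in range(8):
        name = f"gpu{gpu_id}"
        print(name, "->", local_nvme(topo, name))
```

Under this assumed layout, `gpu3` sits on `switch1` with `nvme3` through `nvme5`, so a data loader feeding that GPU would read its shard from those drives.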

Designed for Seamless Acceleration and Efficient Data Movement

To keep pace with growing model complexity and data volume, the P9000G6 (AC) supports high-speed DDR5 memory, delivering up to 5600 MT/s at 1DPC and 4400 MT/s at 2DPC. NVIDIA NVLink™ Bridge technology enhances communication between GPUs, enabling them to work together more efficiently on large-scale parallel tasks. At the system level, an Inventec-designed switchboard provides direct data paths between network adapters and GPUs via NVIDIA GPUDirect® RDMA, which lets the adapters read and write GPU memory without staging data through host memory, helping eliminate bottlenecks and unlock the full performance of the platform.
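The quoted memory speeds translate into a theoretical peak bandwidth of MT/s × 8 bytes per transfer × number of channels. The sketch below assumes all eight DDR5 channels per socket on 4th/5th Gen Xeon Scalable processors are populated in a dual-socket configuration; actual DIMM population on this board is not stated here, and sustained bandwidth in real workloads is lower than this ceiling.

```python
# Theoretical peak DDR5 bandwidth. Assumption: all 8 DDR5 channels per socket
# (4th/5th Gen Xeon Scalable) are populated, across 2 sockets.
BYTES_PER_TRANSFER = 8  # 64-bit data path per channel, ECC bits excluded
CHANNELS_PER_SOCKET = 8
SOCKETS = 2


def peak_bandwidth_gbs(mts: int, channels: int = CHANNELS_PER_SOCKET * SOCKETS) -> float:
    """Peak bandwidth in GB/s: MT/s x bytes per transfer x channels / 1000.

    At 2DPC two DIMMs share each channel, so the peak is set by the lower
    channel rate (4400 MT/s), not by the number of DIMMs installed.
    """
    return mts * BYTES_PER_TRANSFER * channels / 1000


if __name__ == "__main__":
    for label, mts in (("5600 MT/s @ 1DPC", 5600),
                       ("4800 MT/s @ 1DPC", 4800),
                       ("4400 MT/s @ 2DPC", 4400)):
        print(f"{label}: {peak_bandwidth_gbs(mts):6.1f} GB/s")
```

Under these assumptions this works out to 716.8 GB/s at 5600 MT/s, 614.4 GB/s at 4800 MT/s, and 563.2 GB/s at 4400 MT/s across both sockets.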

Designed for Serviceability

Continuing Inventec’s commitment to customer-centric engineering, the P9000G6 (AC) adopts a modular architecture that simplifies system maintenance. Tool-less trays and component modules enable fast servicing of fans, power supplies, NICs, and storage. The separation of the host and GPU modules further optimizes airflow and thermal zoning, while providing clear physical segmentation for operational convenience.

2x M.2 SATA or NVMe SSDs

2400W 12V PSU with 1+1 redundancy support

5x 8056 fans for CPU cooling
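A 1+1 rating means one of the two 2400W supplies can fail while the other carries the full 12V load alone. The minimal sketch below checks whether a given load stays protected; it assumes supply capacities add linearly and ignores derating for input voltage and temperature, and the function names and example loads are illustrative, not part of any Inventec tool.

```python
# Sketch of N+M power supply redundancy arithmetic. Assumptions: capacities
# add linearly, and derating for input voltage or temperature is ignored.
PSU_RATING_W = 2400.0  # per-PSU 12V output rating from the spec list


def usable_capacity_w(rating_w: float, installed: int, redundant: int) -> float:
    """Capacity still available after `redundant` supplies have failed."""
    if not 0 <= redundant < installed:
        raise ValueError("need at least one non-redundant supply")
    return rating_w * (installed - redundant)


def load_is_protected(load_w: float, rating_w: float = PSU_RATING_W,
                      installed: int = 2, redundant: int = 1) -> bool:
    """True if the load survives the loss of `redundant` supplies (1+1 by default)."""
    return load_w <= usable_capacity_w(rating_w, installed, redundant)


if __name__ == "__main__":
    for load in (1800.0, 2600.0):  # hypothetical 12V loads
        print(f"{load:.0f} W load protected under 1+1: {load_is_protected(load)}")
```

In a 1+1 arrangement the second supply adds fault tolerance rather than guaranteed capacity: the protected budget stays at 2400W, so a hypothetical 2,600 W load could run on both supplies but would lose protection if either one failed.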